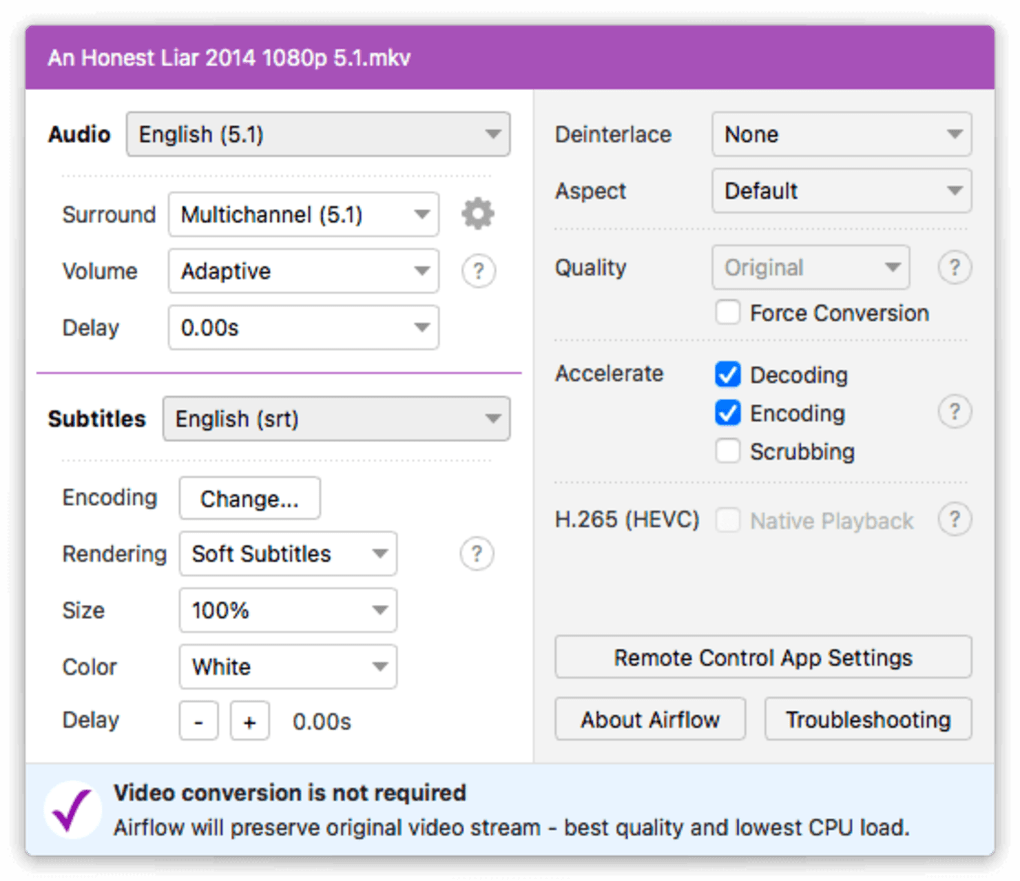

A simple working Airflow pipeline with dbt and Snowflake

First, let us create a folder and move into it by running the command below: `mkdir dbt_airflow && cd "$_"`.

Next, we will get the docker-compose file for Airflow. To do so, let's curl the file onto our local laptop: `curl -LfO ''`.

We will now adjust our docker-compose file and add our 2 folders as volumes. The `dags` folder is where the Airflow DAGs are placed for Airflow to pick up and analyse. The `dbt` folder is where we configure our dbt models and our CSV files.

We now need to create an additional file with additional docker-compose parameters: a `.env` file containing `_PIP_ADDITIONAL_REQUIREMENTS=dbt==0.19.0`. This way, dbt will be installed when the containers are started.

We also need to create a dbt project as well as a `dags` folder. For the dbt project, run `dbt init dbt`; this is where we will configure dbt later in step 4. For the dags folder, just create the folder with `mkdir dags`. Your repository tree should then contain the `dags` and `dbt` folders alongside the docker-compose file and `.env`.

Now that we have our repo up, it is time to configure and set up our dbt project. Before we begin, let's take some time to understand what we are going to do for our dbt project. As shown in the diagram, we have 3 CSV files: bookings_1, bookings_2 and customers.
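The folder and `.env` steps above can be scripted roughly as follows. This is a minimal sketch that assumes bash. The helper name `setup_project` is hypothetical. Three steps stay manual: downloading the docker-compose file, adding the volumes to it, and running `dbt init dbt` (which needs dbt installed locally).

```shell
#!/usr/bin/env bash
# Hypothetical helper that scripts the folder steps described in the text.
# Not covered: downloading docker-compose.yaml, adding the volumes to it,
# and running `dbt init dbt` (that needs dbt installed locally).

setup_project() {
  # Project root; defaults to the folder name used in the text
  project_dir="${1:-dbt_airflow}"

  # dags/ is where Airflow looks for DAG files once it is mounted as a volume
  mkdir -p "$project_dir/dags" || return 1

  # .env with the extra pip requirement from the text, so that dbt 0.19.0
  # is installed when the containers start (note the pip-style `==` pin)
  printf '%s\n' '_PIP_ADDITIONAL_REQUIREMENTS=dbt==0.19.0' > "$project_dir/.env" || return 1

  echo "Created $project_dir/dags and $project_dir/.env"
}
```

Usage: `setup_project dbt_airflow && cd dbt_airflow`. Writing paths relative to the project folder avoids depending on the current directory, unlike the `cd "$_"` one-liner.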

Airflow tutorial install

Please install Docker Desktop on your desired OS by following the Docker setup instructions. We will be running Airflow as a container.
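Once Docker Desktop is installed, the Airflow containers are usually started with Docker Compose from the folder that holds the docker-compose file. The sketch below is minimal, and the function names are hypothetical. It assumes the downloaded compose file defines an `airflow-init` service, as the official Airflow quick-start compose file does. It also defaults to the standalone `docker-compose` binary; set `COMPOSE="docker compose"` if your installation ships Compose v2 instead.

```shell
#!/usr/bin/env bash
# Sketch: verify the Compose tooling exists, then start the Airflow containers.
# Assumption: the compose file defines an `airflow-init` service.
# COMPOSE may hold a multi-word command such as "docker compose".

COMPOSE="${COMPOSE:-docker-compose}"

check_docker() {
  # The first word of $COMPOSE is the executable to look for
  compose_bin="${COMPOSE%% *}"
  if ! command -v "$compose_bin" >/dev/null 2>&1; then
    echo "Missing '$compose_bin': install Docker Desktop first" >&2
    return 1
  fi
}

start_airflow() {
  check_docker || return 1
  # One-off service that initialises the Airflow metadata database and admin user
  $COMPOSE up airflow-init || return 1
  # Start the remaining services (webserver, scheduler, ...) in the background
  $COMPOSE up -d
}
```

Usage: run `start_airflow` from the folder that contains the docker-compose file and `.env`. `$COMPOSE` is deliberately left unquoted so that a two-word value like `docker compose` splits into a command and its subcommand.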

Airflow tutorial code

For connection details about your Git repository, open the repository and copy the HTTPS link provided near the top of the page. If you have at least one file in your repository, click the green Code button near the top of the page and copy the HTTPS link. Use that link in VS Code or your favorite IDE to clone the repo to your computer.
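If you prefer the terminal to an IDE, `git clone` does the same job. This is a minimal sketch: `clone_repo` is a hypothetical helper, and the URL in the usage line is a placeholder for the HTTPS link you copied.

```shell
#!/usr/bin/env bash
# Hypothetical helper: command-line equivalent of cloning from VS Code.

clone_repo() {
  repo_url="${1:-}"
  target_dir="${2:-dbt_airflow}"
  if [ -z "$repo_url" ]; then
    echo "usage: clone_repo <https-link> [target-dir]" >&2
    return 2
  fi
  # Fetch the repository (all history and the default branch) into target_dir
  git clone "$repo_url" "$target_dir"
}
```

Usage (placeholder URL): `clone_repo "https://github.com/<your-user>/<your-repo>.git" dbt_airflow`.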

Airflow tutorial how to

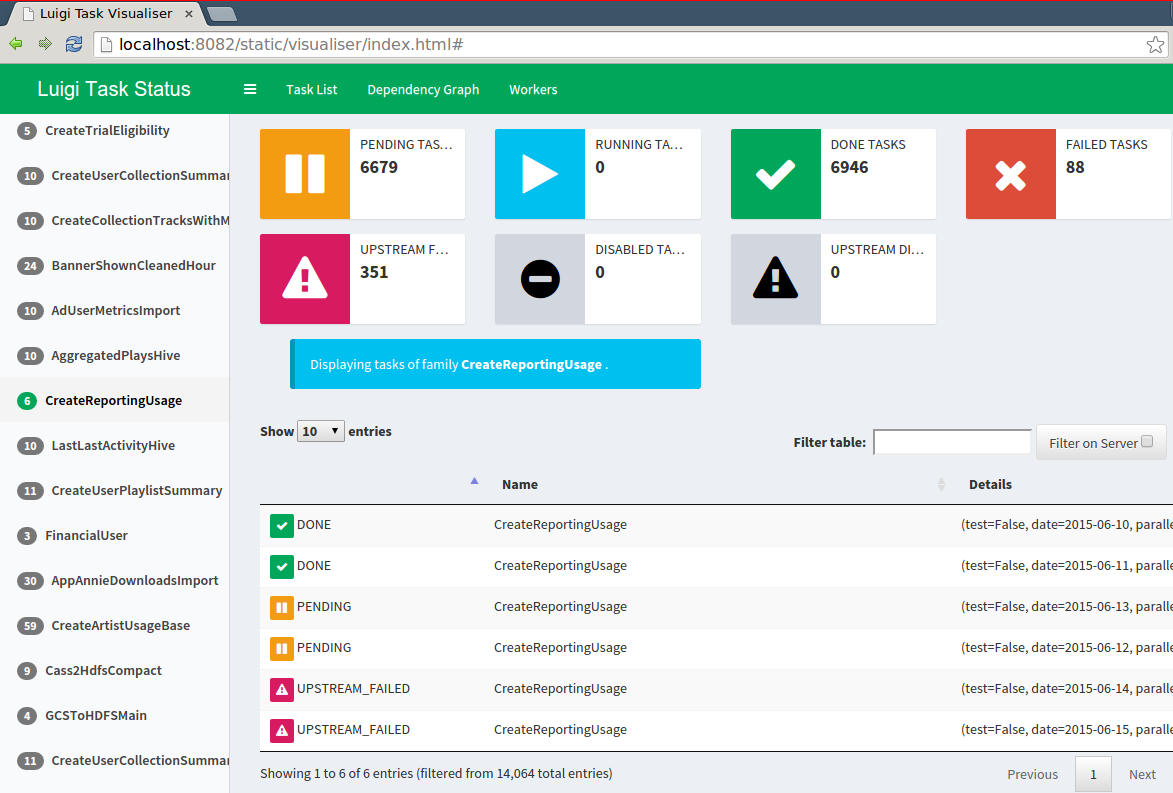

In this virtual hands-on lab, you will follow a step-by-step guide to using Airflow with dbt to create data transformation job schedulers. This guide assumes you have a basic working knowledge of Python and dbt.

What You'll Learn

- how to use an open-source tool like Airflow to create a data scheduler
- how to write a DAG and upload it onto Airflow
- how to build scalable pipelines using dbt, Airflow and Snowflake

dbt CLI is the command line interface for running dbt projects.
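As a rough illustration of the dbt CLI, the commands below load the CSV files, build the models and run the tests. This sketch makes three assumptions. First, the project folder is `./dbt`, created with `dbt init dbt`. Second, a `profiles.yml` with the Snowflake connection sits in that same folder (dbt otherwise looks in `~/.dbt/`). Third, the CSV files are registered as dbt seeds. `run_dbt_pipeline` and `DBT_PROJECT_DIR` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: run the dbt steps of this project from the command line.
# Assumptions: project in ./dbt, profiles.yml in the same folder,
# CSV files (bookings_1, bookings_2, customers) loaded as dbt seeds.

DBT_PROJECT_DIR="${DBT_PROJECT_DIR:-./dbt}"

run_dbt_pipeline() {
  # Load the CSV seed files into the warehouse
  dbt seed --project-dir "$DBT_PROJECT_DIR" --profiles-dir "$DBT_PROJECT_DIR" || return 1
  # Build the models on top of the seeded tables
  dbt run --project-dir "$DBT_PROJECT_DIR" --profiles-dir "$DBT_PROJECT_DIR" || return 1
  # Run the tests defined for the models
  dbt test --project-dir "$DBT_PROJECT_DIR" --profiles-dir "$DBT_PROJECT_DIR"
}
```

Stopping at the first failing step mirrors how the commands depend on each other: models cannot be built before their source data is loaded.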

Data Engineering with Apache Airflow, Snowflake & dbt

Numerous businesses are looking at a modern data strategy built on platforms that can support agility, growth and operational efficiency. Snowflake is the Data Cloud, a future-proof solution that can simplify data pipelines for all your businesses so you can focus on your data and analytics instead of infrastructure management and maintenance.

dbt is a modern data engineering framework maintained by dbt Labs that is becoming very popular in modern data architectures, leveraging cloud data platforms like Snowflake.

Apache Airflow is an open-source workflow management platform that can be used to author and manage data pipelines. Airflow uses workflows made of directed acyclic graphs (DAGs) of tasks.
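To make the DAG idea concrete, here is a sketch that writes a two-task DAG into the `dags` folder, where Airflow picks it up. Several assumptions are not in the original text:

- Airflow 2.x, which provides `airflow.operators.bash.BashOperator`.
- The `dbt` folder is mounted at `/dbt` inside the containers.
- `profiles.yml` is stored in that folder.
- The DAG id `dbt_example` and the helper `write_dbt_dag` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: write a minimal Airflow DAG file into the dags/ folder.
# Assumptions: Airflow 2.x, dbt folder mounted at /dbt, profiles.yml inside it.

write_dbt_dag() {
  dags_dir="${1:-dags}"
  mkdir -p "$dags_dir" || return 1
  # Quoted heredoc delimiter: the Python below is written verbatim, no shell expansion
  cat > "$dags_dir/dbt_example_dag.py" <<'PY'
from datetime import datetime

from airflow import DAG
from airflow.operators.bash import BashOperator

# Two tasks form a directed acyclic graph: dbt_seed runs before dbt_run.
with DAG(
    dag_id="dbt_example",
    start_date=datetime(2021, 1, 1),
    schedule_interval=None,  # no schedule; trigger manually from the Airflow UI
    catchup=False,
) as dag:
    dbt_seed = BashOperator(
        task_id="dbt_seed",
        bash_command="cd /dbt && dbt seed --profiles-dir .",
    )
    dbt_run = BashOperator(
        task_id="dbt_run",
        bash_command="cd /dbt && dbt run --profiles-dir .",
    )
    dbt_seed >> dbt_run
PY
}
```

The `>>` operator adds the edge of the graph, so `dbt_seed` must finish before `dbt_run` starts. `BashOperator` is used because dbt is driven through its CLI, which keeps the DAG free of dbt-specific Python imports.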
